07 Apr 2021

What is AI Governance & Why Do We Need It?

by Amish Chudasama, Vice President Research & Development

Engineers love it. Corporate boards like it. Customers depend on it. Even POTUS recently signed an executive order on “Promoting the Use of Trustworthy Artificial Intelligence in the Federal Government.” Undoubtedly, AI has remarkable capabilities that help us solve complex challenges at every scale, from macro to micro. But as new AI applications and proofs of concept (POCs) move from test labs into full integration with enterprise systems, leaders must address AI-related governance questions sooner rather than later.

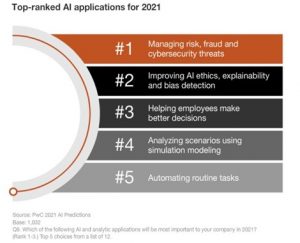

Adoption of AI technologies by private and public sector enterprises is well underway. PwC’s “AI predictions 2021” survey respondents say they already use AI applications to:

- Manage fraud, waste, abuse, and cybersecurity threats

- Improve AI ethics, explainability, and bias detection

- Help employees make better decisions

- Analyze scenarios using simulation modeling

- Automate routine tasks

But AI-driven decisions are only as good as the governance applied to the AI models and the data used to train them. Gartner predicts that by 2023, up to 70% of commercial AI products that lack transparent, ethical processes as part of a governance strategy will be stopped due to public opposition or activism.

What is AI Governance?

AI governance is a framework that proposes how stakeholders can best safeguard the research, design, and use of machine learning (ML) algorithms and AI in decision-making. AI governance is not simply a matter of meeting compliance requirements. AI requires ongoing care and adequate oversight to ensure it is used equitably and ethically.

For example, AI algorithms already influence who does (or doesn’t) get a job interview, government benefits, credit, loans, and medical services. People create algorithms that, if left unscrutinized, ultimately reflect their makers’ biases as well as the quality and timeliness of the data they ingest. Because these mathematical constructs and historical data cannot understand what is “equitable” by today’s standards, public and private sector leaders, algorithm designers, technologists, and citizens have an ethical and professional responsibility to screen for bias and to prevent, monitor, and mitigate “drift and bias” in AI/ML models. Results from biased models and data sets, whether the bias is conscious or unconscious, can adversely impact individuals as well as government agency missions and programs, businesses, and society.

In AI We Trust…Not So Fast

AI solutions that address problems and modernize systems can affect society in massive, untold ways, both positive and negative. How does one trust an AI model to produce a “correct” answer? Correct according to whom, or to which model or algorithm, and in what context? Without FDA-like oversight authority, how can government AI models equitably reflect and be, as Abraham Lincoln famously said, “of the people, by the people, for the people”? To demonstrate credibility to business and public sector leaders and the public, AI models must consistently deliver trustworthy responses.

Much of the bias in AI models comes from the data used to train the algorithms. Training can be supervised or unsupervised, and either machine learning approach can reflect bias if proper governance practices are not in place. For example, facial recognition models are often trained on data sets dominated by light-skinned people of European descent, with darker-skinned people under-represented. As a result, such a model is more likely to produce accurate results for people with light skin and false positives for people with dark skin. Consider these other publicly known incidents of bias in AI models:

- Amazon reined in its recruitment bot due to its sexist hiring algorithm.

- Google has been hit hard by negative publicity after Timnit Gebru and Margaret Mitchell publicly voiced concerns about bias and toxicity in Google’s AI systems.

- Steve Wozniak, who designed the Apple-1 computer and co-founded Apple with Steve Jobs, received 10 times the credit limit his wife did on Apple’s credit card due to a biased algorithm.

AI initiatives shaped early by a well-thought-out, effective governance strategy reduce the risk and costs associated with fixing foundational bias later.
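To make the facial recognition example in this section concrete, here is a minimal sketch of one way a team might compare false positive rates across demographic groups. The function name, the group labels, and the evaluation records are all hypothetical and made up for illustration; they are not drawn from any real system or data set.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Return {group: false positive rate} for (group, actual, predicted) records.

    `actual` and `predicted` are booleans. A false positive is a predicted
    match where there was no actual match.
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for group, actual, predicted in records:
        if not actual:
            negatives[group] += 1
            if predicted:
                false_positives[group] += 1
    # Groups with no actual negatives have no defined rate and are skipped.
    return {g: false_positives[g] / n for g, n in negatives.items() if n > 0}


# Made-up evaluation records, for illustration only.
records = [
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", False, True),
    ("group_b", False, True),
    ("group_b", False, True),
    ("group_b", False, False),
    ("group_b", False, False),
    ("group_a", True, True),
    ("group_b", True, True),
]

rates = false_positive_rate_by_group(records)
for group, rate in sorted(rates.items()):
    print(f"{group}: false positive rate = {rate:.2f}")
```

In this toy data, group_b is falsely matched twice as often as group_a. A gap like that is the kind of signal a governance review would want surfaced before a model reaches production.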

Critical Principles of AI Governance

With a strong AI governance plan in place, organizations using AI/ML can prevent reputational damage, wasted investment in inherently biased models, and poor or inaccurate results. Here is an introduction to seven fundamental principles of good AI governance to follow: explainability, transparency, interpretability, fairness, privacy and security, accountability, and being beneficial. Look for future Reveal blogs that cover these AI governance principles in more detail.

- Explainability or explainable AI (XAI) means the methods, techniques, and results (e.g., classification) from an AI solution must be articulated in terms humans can understand. A human should understand what actions an AI model took, is taking, and will take to generate a decision or result. That understanding lets people confirm existing knowledge, challenge it, and adjust the assumptions used in the model to mitigate bias. XAI uses “white-box” machine learning (ML) models that generate results easily understood by domain experts. In contrast, “black-box” ML models are opaque, and their complexity makes them hard to understand, let alone explain or trust.

- Transparency is the AI model designer’s ability to describe the data extraction parameters for training data and the processes applied to that data in easy-to-understand language.

- Interpretability means humans must be able to translate and clearly convey the basis for decision-making in the AI model.

- Fairness ensures AI systems treat all people fairly and are not biased toward any specific group.

- Privacy and security means AI systems should protect people’s privacy and produce results without posing security risks.

- Accountability ensures AI systems and their makers are held accountable for what they produce.

- Being beneficial means AI should contribute to society and people in positive ways.
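As a rough illustration of the “white-box” idea described under explainability, the sketch below scores an applicant with a simple linear model and reports how much each input contributed to the result. The feature names, weights, and applicant values are invented for this example; a real model would learn its weights from data and would need the bias screening discussed above.

```python
def explain_linear_score(weights, bias, features):
    """Score one case with a linear model and return per-feature contributions.

    Each contribution is simply weight * value, so a domain expert can see
    exactly which inputs pushed the score up or down.
    """
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    return score, contributions


# Hypothetical weights and applicant, for illustration only.
weights = {"income_thousands": 0.5, "years_employed": 2.0, "missed_payments": -10.0}
bias = 10.0
applicant = {"income_thousands": 60, "years_employed": 5, "missed_payments": 1}

score, contributions = explain_linear_score(weights, bias, applicant)
print(f"score = {score:.1f}")
# List the largest influences first so the explanation leads with what mattered most.
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"  {name}: {value:+.1f}")
```

Because every contribution can be read directly, a reviewer can challenge an individual weight or input. That is much harder to do with an opaque model.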

Critical AI Governance Questions to Answer

By 2024, Gartner predicts 60% of AI providers will include a means to mitigate possible harm as part of their technologies. Similarly, by 2023, analysts predict all personnel hired for AI development and training work will have to demonstrate expertise in the responsible development of AI. These predictions suggest enterprises would be wise to invest in AI governance planning now to answer these critical questions about results from and decision-making parameters of AI applications:

- Who is accountable? If biases or mistakes are found in an AI model, who is to correct them, and what standards are they to use? What consequences are there, and for whom?

- How does AI align with your strategy? Consider where AI is essential to or can enhance your business or mission success.

- For example, could AI improve and automate operational processes to gain efficiency? Are network threats recognized early enough to prevent downtime? Are you alerted when machinery is about to fail so you can fix it before your mission or business is affected?

- What processes could be modified or automated to improve the AI results?

- What governance, performance, and security controls are needed to flag faulty AI models?

- Are the AI models’ results consistent, unbiased, and reproducible?
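One way to picture the controls these questions point to is an automated check that flags a model when its reported metrics fall outside agreed limits. The sketch below is only an illustration: the `ModelReport` fields, the model name, and both thresholds are assumptions made for this example. The 0.80 ratio is loosely modeled on the “four-fifths” rule of thumb from US employment-selection guidance, and each organization would need to set its own limits.

```python
from dataclasses import dataclass, field


@dataclass
class ModelReport:
    """Hypothetical summary of a model's evaluation results."""
    name: str
    accuracy: float
    # Maps each group to the share of cases that received a positive decision.
    selection_rates: dict = field(default_factory=dict)


def governance_flags(report, min_accuracy=0.90, min_rate_ratio=0.80):
    """Return a list of human-readable reasons this model needs review.

    Both thresholds are illustrative assumptions, not recommended values.
    """
    flags = []
    if report.accuracy < min_accuracy:
        flags.append(f"accuracy {report.accuracy:.2f} is below {min_accuracy:.2f}")
    rates = list(report.selection_rates.values())
    if rates:
        highest, lowest = max(rates), min(rates)
        # Compare the least-favored group's rate with the most-favored group's.
        if highest > 0 and lowest / highest < min_rate_ratio:
            flags.append(
                f"selection rate ratio {lowest / highest:.2f} is below {min_rate_ratio:.2f}"
            )
    return flags


# Made-up report, for illustration only.
report = ModelReport("loan_model_v2", accuracy=0.93,
                     selection_rates={"group_a": 0.50, "group_b": 0.30})
flags = governance_flags(report)
print(report.name, "needs review:" if flags else "passed:", flags)
```

Here the model passes the accuracy check but is flagged because group_b receives positive decisions far less often than group_a. An empty list would mean the model cleared both checks, not that it is free of bias.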

If you need help answering these or other AI governance or AI/ML solution questions or are looking for a solution to protect your investment in complex enterprise systems with AI algorithms embedded, contact a Reveal AI Governance expert to discuss your needs.

Join us next week for the second blog in our series on AI governance.